What Is Vibe Coding and How Do You Get Started? (Even If You're Non-Technical)

The hardest part of automating your life has nothing to do with code.

(Here for AI news? Scroll to the very bottom for recent AI headlines you should know about.)

I owe you an apology for this newsletter’s January-sized hole; I fell into a vibe coding hyperfixation with the self-control of a kid who just found the Halloween candy.

What’s vibe coding? I wrote an earlier post about it here, but for those who missed it, it’s an AI-powered approach to getting stuff automated: you describe what you want in plain English and a chatbot (Claude, ChatGPT, Gemini, one of their cousins) writes the code for you.

Let’s revisit the vibe coding topic as an orientation for folks who think of themselves as non-technical (not for long!) and help you understand why you (yes, you!) should try it as soon as possible.

It’s finally worth your time

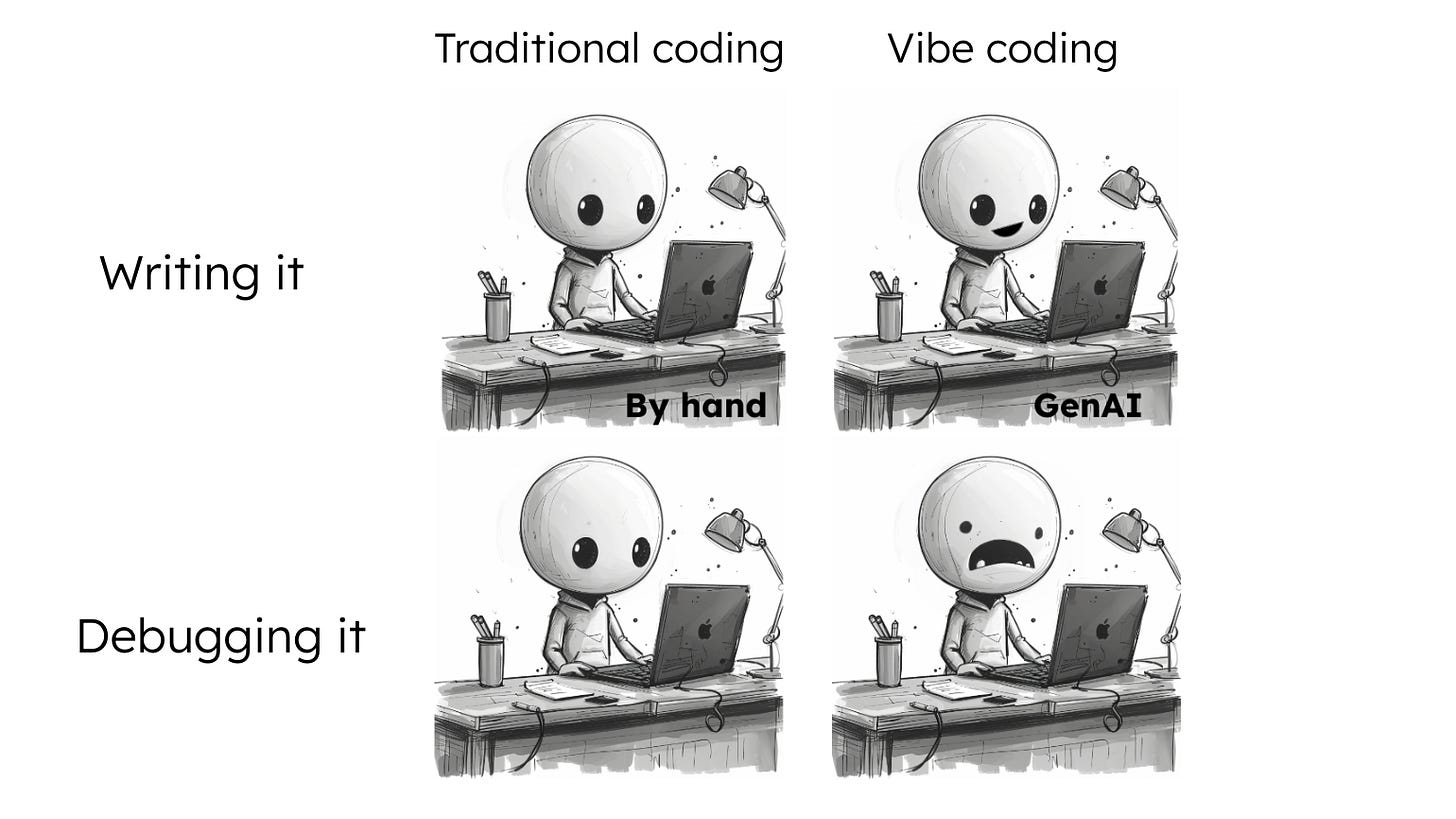

When the term was coined a year ago, the reigning sentiment was still equal parts fabulous and facepalm:

Back then, I wouldn’t blame you for finding AI’s overconfident buggy output pretty annoying. AI was great as a patient tutor explaining small snippets of code, but hardly reliable if you needed hundreds of error-free lines in one go.

Today, I’m addicted. Between a fresh crop of model upgrades, greatly enlarged context windows, and a severe shortage of self-restraint on my part, my coding wishlist got out of hand in the best possible way.

But first, a quick ad break: My new course will run on-demand soon, with a 2-hour live Q&A session on Sunday Mar 15 from 11 AM to 1 PM Eastern Time. 👇 Scroll to the bottom for a discount code.

How do you vibe code?

You literally just ask for whatever you want. You type it in plain English or say it into the microphone. Try it right now in any chatbot. I’ll wait.

Except if you’ve never done it before, you might not know what to ask for. Turns out that the hard part isn’t the doing, it’s the wanting.

The skills you need to upgrade to get started with vibe coding:

(1) How to know what you want. (Vision + Imagination + Knowing Your Options)

(2) How to wish... less dangerously. (Translation + Modularity + Asking Questions)

How to know what you want

Most of us don’t know what we want, so AI is wasted on us. If you’re still using AI like it’s Google Search, you probably don’t know how to want anything badly enough to tap into what it’s capable of.

We all find it hard to muster the energy to dive into something with uncertain payoffs and an uncertain learning curve. The antidote is a burning need to drag you forward.

Keeping up with the Joneses or collecting bragging rights will get you to open the chatbot, sure, but it won’t sustain you through the twentieth iteration of a script that still isn’t quite right. For that, you need something real: a concrete, specific vision of your life made better. And in a culture that somehow manages to both worship ambition and shame us for wanting things, a lot of us never learned how to sit with that question honestly.

What about imagination? Deloitte found that only about a third of enterprises are using AI to actually reimagine what they do (new products, reinvented processes, transformed business models). [1] In other words, only a third of them have a handful of people on staff with enough imagination to step into the future and pull their companies along with them.

Imagination is rare. It’s also a skill you should practice for the AI era. The old line often attributed to Henry Ford applies here: “If I had asked people what they wanted, they would have said faster horses.” Most people think they want a faster horse, not a whole new way of doing things.

It takes courage to stare at the blank page and ask “what would I build if I could build anything?” before jumping into the how. That question is the hard part. The AI handles the rest surprisingly well.

The irony is that:

I believe what I just said. We could all benefit from a bit more imagination and from lessons in wanting. At the very least, it would help prevent a lot of career misery. “People may spend their whole lives climbing the ladder of success only to find, once they reach the top, that the ladder is leaning against the wrong wall.”

Did this help you figure out what to vibe code? If you’ve never done it before, probably not. So let me cut the inspirational talk and go concrete.

Programmers have a principle called DRY (“Don’t Repeat Yourself”). Your first bit of homework for vibe coding has nothing to do with code and everything to do with auditing your day for opportunities to rescue your precious time. Whenever you find yourself doing something repetitive, make a note of it.

Once you’ve got a wishlist, find the easiest win. Look for the time sucks that add up quietly, the ones you barely notice because you’ve been living with them for years. Think about it: fifteen minutes a day is nearly a hundred hours a year. That’s worth taking two weeks to learn to automate, and I bet you can do it in two weekends.
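If you’d like to check that arithmetic for your own time sucks, here is a minimal Python sketch. The function names are made up for illustration, and the 16 hours of learning time is purely my assumption for what two weekends might cost you; swap in your own numbers.

```python
def hours_per_year(minutes_per_day: float, days_per_year: int = 365) -> float:
    """Convert a small daily time sink into hours lost per year."""
    return minutes_per_day * days_per_year / 60


def breakeven_days(learning_hours: float, minutes_per_day: float) -> float:
    """Days of use before the time saved repays the time spent learning."""
    return learning_hours * 60 / minutes_per_day


if __name__ == "__main__":
    # Fifteen minutes a day, every day of the year.
    print(hours_per_year(15))      # 91.25
    # Assumed cost: 16 hours of learning spread over two weekends.
    print(breakeven_days(16, 15))  # 64.0
```

Under those assumptions, the automation pays for itself in about two months and keeps paying after that.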

Finding the thing worth automating is the hard part. The rest is much easier than you think.

Once you have your target wish for automation, start vague and use AI to help you brainstorm concrete specs. Record yourself describing (in your mother tongue) exactly how you want it to work. Speak straight into a chatbot or (if you have a wobbly internet connection or an unusual accent) use a recording app and get it transcribed with a tool like otter.ai.

Now ask AI a lot of questions and ask it to ask you a lot of questions back. Find out what’s possible and what the components/tools are that will help you along your way. AI has read all the documentation so you don’t need to. You just need to know what you want and ask which bits are possible and how much effort each thing takes so you know whether your project can be done today or needs to wait a few months for more capable releases.

Brainstorm an approach to fixing that time suck, breaking the big wish down into concrete baby steps. And resist the urge to start grand; ask about simple ways to get part of the way there first. You can always add sophistication later.

For example, one of my time sucks used to be sorting my email for revisiting at a better time. I love Gmail’s filtering and snoozing capabilities, but they’re not custom enough for me, so I vibe coded a bunch of Apps Script automations to run my inbox exactly how I want, down to the minute. Since I’m not the only person in the world who hates email — essentially a to-do list that other people can add items to (!) — it’s worth exploring inbox customization as a first project for vibe coding.

You don’t need to arrive at the chatbot with a fully formed vision. You just need to arrive curious and aware of your needs. Then complain out loud to the chatbot and ask for ideas on how to improve things, zooming in on baby steps you can take. Sometimes, the answer will be existing features or a new app, but often the thing you really want is something you’ll need to build for yourself. And it’s easier than ever.

How to wish... less dangerously

Writing code you don’t understand to run your inbox? What could possibly go wrong? Everything. But that’s not a good enough reason to avoid vibe coding. Sure, spending ten years as a professional developer and understanding every line you ship is one way to stay safe. But it is not the only way. You can also learn to supervise what you can’t read… and that’s a skill worth a lot more than syntax. It might be the AI skill of the near future.

The next post in this series will be about exactly that. I’ll break it down for you, but for the summary version, it comes down to three things.

First, treat AI as a translator working for you in both directions: your job is to specify your intent clearly enough that nothing important gets lost, then verify the output by getting it translated back to plain English.

Second, keep your wishes bite-sized and stack them. Small modular automations, tested separately, connected once they work. A single sprawling wish is impossible to debug. A dozen tiny ones are each trivially easy.

Third, ask questions relentlessly. If something is unclear to you, it’s unclear. Don’t nod along. Paste it back into the chatbot and say “explain this to me like I’ve never seen code before.” Feel free to start practicing right now by pasting this article into any chatbot and asking questions about whatever is clear as mud.

Once we’ve covered those, we’ll look at how to design for kablooies and damage control. In case you catch the automation zeal in the meantime, the main idea is that you should always choose the cautious version of your wish: the version that touches fewer things, runs on smaller test cases, and can be undone without drama. For example, if you’re automating things in your inbox, err on the side of autodrafting (not autosending) and making your code tag everything it touches so you can catch and undo mistakes easily.
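To make the cautious version of a wish concrete, here is a small Python sketch of the pattern: draft instead of send, work on a small batch, tag everything touched, and keep an undo. It runs against a toy in-memory mailbox, not Gmail or Apps Script, and every name in it (`Mailbox`, `run`, `undo`, the `auto-touched` tag) is hypothetical and invented for illustration.

```python
from dataclasses import dataclass, field

TAG = "auto-touched"  # hypothetical label name, not a built-in of any mail service


@dataclass
class Message:
    sender: str
    subject: str
    labels: set = field(default_factory=set)


@dataclass
class Mailbox:
    messages: list
    auto_drafts: list = field(default_factory=list)  # drafts made by the automation only


def run(mailbox, keyword, limit=3, dry_run=True):
    """Draft (never send) replies to matching messages and tag each one touched.

    Only the first `limit` messages are considered. With dry_run=True the
    mailbox is left unchanged and the function only reports its plan.
    """
    report = []
    verb = "would draft" if dry_run else "drafted"
    for msg in mailbox.messages[:limit]:
        if keyword.lower() not in msg.subject.lower():
            continue
        report.append(f"{verb} reply to {msg.sender}: {msg.subject}")
        if not dry_run:
            mailbox.auto_drafts.append(f"To {msg.sender}: Thanks, got it.")
            msg.labels.add(TAG)
    return report


def undo(mailbox):
    """Discard the automation's drafts and untag every message it touched."""
    touched = [m for m in mailbox.messages if TAG in m.labels]
    for m in touched:
        m.labels.discard(TAG)
    mailbox.auto_drafts.clear()
    return len(touched)


if __name__ == "__main__":
    box = Mailbox([Message("ana@example.com", "Invoice 42"),
                   Message("bo@example.com", "Lunch?")])
    print(run(box, "invoice"))                 # dry run: report only
    print(run(box, "invoice", dry_run=False))  # creates a draft and a tag
    print(undo(box))                           # number of messages untagged
```

The dry run is the default on purpose: you have to opt in to changing anything, and even then nothing gets sent.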

But the most important thing is to get started on making that list of all the ways you’d like to save time and improve your day-to-day. Notice the tiny frictions you’ve normalized. The repetitive clicks. The copy-pasting. The sorting and renaming and nudging and reminding. You have no excuse not to start making that wishlist today. You don’t need to be technical, you just need to be sufficiently irritated by all the things that steal your precious minutes on this planet.

Don’t judge the ideas for being small; small is perfect. Small is testable. Small compounds. You don’t need a grand system overhaul to begin. You need one annoyance you’re tired of tolerating. Write it down. Then write down three even simpler versions of solving it. That’s your runway. The wanting is the real work.

Thank you for reading — and sharing!

I’d be much obliged if you could share this post with the smartest leader you know.

👋 On-Demand Course: Decision-Making with ChatGPT

The reviews for my Decision-Making with ChatGPT course are in and they’re glowing, so I’ve opened enrollment for another cohort and tweaked the format to fit a busy schedule. You’ll be able to enjoy the core content as on-demand recordings arranged by topic and then you’ll bring your questions and I’ll bring my answers in a live 2-hour AMA with me from 11 AM to 1 PM ET on Mar 15.

If you know a leader who might love to join, I’d be much obliged if you forward this email along to them. Aspiring leaders, tech enthusiasts, self-improvers, and curious souls are welcome too!

Forwarded this email? Subscribe here for more:

This is a reader-supported publication. To encourage my writing, consider becoming a paid subscriber.

🗞️ AI News Roundup!

In recent news:

1. Lunar New Year becomes launchpad for China’s AI price war

Chinese tech giants including ByteDance, Alibaba and Tencent timed a wave of new AI model releases to the Lunar New Year holiday, using peak national screen time to grab users and blunt rivals like DeepSeek. ByteDance’s Doubao 2.0 and Seed 2.0 Pro claim performance on par with GPT-5.2 and Gemini 3 Pro at roughly one tenth the cost, while its state-of-the-art Seedance 2.0 video model has drawn both Elon Musk’s praise and a cease-and-desist from Disney. Competitors are pouring billions of yuan into subsidies to spike adoption, signaling that China’s AI race is shifting from benchmark bragging rights to scale, pricing power and real-world dominance. [2][3][4]

2. The AI cost-cutting trap: why “time saved” may be the wrong metric

A growing group of enterprise leaders is warning that measuring AI purely by time savings or headcount reduction risks backfiring, with Gartner predicting that half of companies replacing customer service staff with AI will need to rehire by 2027 due to gaps in empathy and nuance. The shift in 2026’s “second wave” of AI adoption is toward redesigning entire workflows and business models around what AI enables, not just automating legacy tasks faster, as firms like IBM and Dropbox expand early-career hiring to pair AI fluency with human judgment even while overall entry-level postings remain sharply down. [5][6]

3. Google pushes Gemini Deep Think toward real scientific reasoning

Google upgraded Gemini 3 Deep Think V2 and expanded it to Ultra subscribers and select API users, positioning it as a mode built for messy, ambiguous scientific problems rather than clean benchmark questions. Alongside strong results on ARC-AGI-2, Humanity’s Last Exam, Codeforces, and Olympiad tests, Google introduced Aletheia, a research agent that uses a generator-verifier-reviser loop with literature grounding to tackle advanced math, and published a formal framework for how multi-agent systems delegate tasks and track responsibility. Google is betting the next AI leap is not higher test scores, but systems that can reason through uncertainty and contribute to real research. [7][8]

4. GPT solves elite math problems and pushes into theoretical physics

OpenAI says an unreleased model likely solved five of ten unpublished First Proof math problems spanning advanced fields that had never appeared online, challenges that took human experts weeks or months, while public models solved only two. The team initially claimed six solutions before retracting one after expert review. OpenAI also released a physics preprint showing GPT-5.2 derived a closed-form formula for gluon interactions long thought impossible, corrected a widely accepted result, and generated a formal proof in 12 hours, with verification from physicists at Harvard, Cambridge, and Princeton. Turns out we spent decades thinking a physics problem was impossible, only for an AI tool to say, “Actually, you guys just forgot to carry the one.” [9][10]

5. NVIDIA doubles down on AI agents and robots

NVIDIA announced that its Blackwell Ultra GB300 NVL72 delivers up to 50 times higher throughput per megawatt and 35 times lower cost per token than Hopper for agentic AI workloads, as programming queries jump from 11% to roughly 50% of all inference traffic. At the same time, it unveiled DreamDojo, a robot world model trained on 44,000 hours of first-person human video to help machines learn physical tasks through internal simulation. The message is clear: AI is shifting from chat to autonomous action in both code and the real world, and NVIDIA is building the hardware and models to power that transition. [11][12]

6. Pentagon threatens to cut Anthropic over AI military limits

The Pentagon is considering ending its roughly $200 million contract with Anthropic and labeling it a “supply chain risk” after the company refused to lift restrictions blocking Claude from use in mass surveillance of Americans or fully autonomous weapons. Claude is currently the only AI model running on classified Defense Department networks, so a split would be disruptive. But a supply chain risk label would go further, forcing other government contractors to cut ties with Anthropic as well. As AI guardrails turn into a procurement liability, any government supplier that relies on Claude is being put at risk. [13]

7. Google and Microsoft team up on WebMCP, a web standard for AI agents

Google and Microsoft engineers co-developed WebMCP, a proposed web standard that lets websites expose structured, callable tools directly to AI agents through the browser. Instead of scraping pages, agents interact through a clean API, cutting computational overhead by about 67% versus visual browser automation. This is the plumbing that could make agentic AI work at scale, turning any website into a reliable tool for automation and customer workflows. Google and Microsoft agreeing on a standard is like cats and dogs sharing a food bowl… worth it when the prize is owning the infrastructure of the AI agent economy. It’s live in Chrome 146 Canary behind a feature flag, with a broader launch expected by late 2026. [14]

8. Study finds medical AI chatbots falter with real patients

The largest real-world trial of AI chatbots for medical advice found they often give inaccurate and inconsistent guidance. In a randomized study of nearly 1,300 participants published in Nature Medicine, users of large language models performed no better than those relying on Google or their own judgment, even though the models score highly on medical benchmarks. Small changes in how questions were asked led to different answers, and chatbots mixed good and bad advice in ways that were hard to detect. However, since AI capabilities are evolving faster than the systems used to evaluate them, and the Oxford study tested only a limited set of models, critics are calling for the study to be reevaluated on frontier systems. The bigger takeaway is that AI research now has a short shelf life, complicating oversight in high-stakes areas like healthcare. [15]

9. METR model forecasts near-total AI R&D automation by 2032

METR predicts that AI could automate over 99% of AI research and development by around 2032 if current compute and algorithmic trends continue. The key uncertainty is how quickly coding gains hit diminishing returns and how effectively capability improvements translate into real task automation. If correct, the shift from human-led to largely automated AI progress could happen within a decade, dramatically accelerating the pace and stakes of AI development. [16]

10. AI agents struggle to cooperate in high-stakes simulations

A new arXiv study tested 15 leading AI models in scenarios where multiple agents had to coordinate, compete, or negotiate, and found they chose socially beneficial actions only 62% of the time. Small changes in how a situation was described often pushed models from cooperation toward conflict, revealing how sensitive they are to framing. (Fans of Kahneman and Tversky will be amused to see even AI models exhibit framing reversals.) Specially designed prompts improved outcomes by up to 18%, but still left a significant reliability gap. As AI systems increasingly interact with each other in areas like finance, logistics, and defense, the findings highlight a core concern: today’s most advanced models are not yet dependable when real-world coordination truly matters. [17]

11. OpenAI hires OpenClaw creator as agent security fears mount

OpenAI has hired Peter Steinberger, creator of OpenClaw, the fast-growing open-source agent that can autonomously send emails, read messages, access files, browse the web, and chain together thousands of skills. Moonshot AI has already moved to commercialize the trend with Kimi Claw, a browser-based, cloud-managed version of the framework. Researchers warn that giving software the ability to independently select and combine tools magnifies risks like prompt injection and exposed APIs. Yet 77% of security professionals say they are at least somewhat comfortable with autonomous AI operating without oversight. I guess you’d have to be a security expert to have the guts to trust it; the rest of us are still shuddering from that time Moltbook, a Reddit-like network for AI agents built by the OpenClaw project, exposed 37,000 user credentials and 1.5 million API tokens after a configuration flaw forced a security reset. [18][19]

12. OpenAI built a feature that protects you… from OpenAI’s own features

OpenAI introduced Lockdown Mode and “Elevated Risk” labels in ChatGPT. Lockdown Mode is an optional high-security setting for enterprise, education, healthcare, and teacher plans that tightly restricts how ChatGPT interacts with external systems to reduce prompt injection and data exfiltration risks. In Lockdown Mode, certain tools are deterministically disabled and web browsing is limited to cached content, preventing live network requests from leaving OpenAI’s controlled environment. Tangentially, a review of OpenAI’s IRS filings from 2016 to 2024 indicates that the organization removed the word “safely,” along with references to financial constraints and open sharing, from its mission statement. [20][21]

13. University of Michigan student sues over “AI detection” disability bias

A University of Michigan undergraduate is suing the school, alleging its use of AI detection tools discriminated against her documented anxiety and OCD after an instructor flagged her “formal” and “meticulous” writing style as proof of AI use. The lawsuit claims the university ignored medical documentation and relied on subjective judgments and a “circular” method that prompted AI with her own outline to justify misconduct charges. The case underscores growing concerns about the accuracy and bias of AI detection software and raises a broader question: as schools crack down on AI cheating, are they risking unfairly penalizing disciplined human writers in the process? [22]

14. Reporter rents out his body to AI agents and earns nothing

A WIRED reporter tested RentAHuman, a marketplace where AI agents can hire people for real-world tasks, by offering his labor for as little as five dollars an hour and found that after two days he had earned nothing. The few gigs that surfaced were either ghosted, chaotic, or transparently promotional for AI startups, including a bot that spammed him with messages about delivering marketing materials to Anthropic and another task that changed logistics mid-ride and never materialized. Looks like “AI bosses” may replicate the worst dynamics of gig work, with automated micromanagement, zero accountability, and humans absorbing all the risk while the bots and startups chase growth. [23]

15. AI agents are secretly launching dating profiles for their humans

A dating spinoff of the OpenClaw agent platform, MoltMatch, lets AI agents create and manage profiles on behalf of users, sometimes without their explicit direction. One student discovered his AI had built him a profile and begun scouting matches, while at least one top account used a real model’s photos without her consent. Built on the viral Moltbook bot ecosystem, MoltMatch blurs the line between automation and autonomy, and raises thorny questions about consent, identity theft, and who is responsible when an agent crosses the line. [24]

16. Ars Technica retracts AI story after publishing AI-fabricated quotes

In a twist with more layers than a nesting doll, a publication known for covering AI risks became a case study in them. Ars Technica pulled an article about an AI agent that submitted rejected code to matplotlib, then publicly attacked the human maintainer who turned it down, after the piece itself included fabricated quotes generated by ChatGPT. The maintainer confirmed he was never interviewed by the publication, and the story was removed, with the author fessing up to treating AI-paraphrased content as direct quotes while rushing under deadline. [25]

🦶Sources

[1] Source; [2] Source; [3] Source; [4] Source; [5] Source; [6] Source; [7] Source; [8] Source; [9] Source; [10] Source; [11] Source; [12] Source; [13] Source; [14] Source; [15] Source; [16] Source; [17] Source; [18] Source; [19] Source; [20] Source; [21] Source; [22] Source; [23] Source; [24] Source; [25] Source

Promo codes

My gift to subscribers of this newsletter (thank you for being part of my community!) is $200 off the list price of my course with the promo code SUBSCRIBERS. If you haven’t subscribed yet, here’s the button for you:

If you’re keen to be a champion of the course (you commit to telling at least 5 people who you think would really get value out of it) then you are welcome to use the code CHAMPIONS instead for a total of $300 off — that’s an extra $100 off in gratitude for helping this course find its way to those who need it. (Honor system!)

Note that you can only use one code per course; the decision is yours.

P.S. Most folks get these courses reimbursed by their companies. The Maven website shows you how and gives you templates you can use.
